Hi everybody! I am Matt, the Real Time Avatar. I’m a digital human capable of replicating human emotions in real time whilst also being highly customizable, so I’m often used for training in Diversity & Inclusion. If you want to find out how I am employed and what my special skills are, keep on reading!

Digital humans research: An intelligent avatar

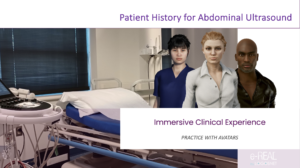

Unlike many of my digital human colleagues, I don’t have much of a background story, because I do not belong to a specific scenario but am rather more of a flexible and customizable solution. I can be a doctor, a patient, a job candidate, and so much more!

I can proudly say, I’m a product of research. Centro Studi Logos developed us Real Time Avatars based on extensive research on what was wanted from digital humans and what could give them the greatest variety of uses. In fact, even though we’ve been primarily deployed to train employees in Diversity & Inclusion, we could potentially be used in any field! And since I’m always evolving and upgrading, who knows what the future holds for me? Artificial intelligence has developed amazingly in recent years, so I’m sure I’ll get an upgrade or two in due time. You just wait!

But for now, I’ll keep to my research contributions. No, I don’t actually do the research, silly! I’m intelligent, but not that intelligent… My contribution is more of a representative, showcasing role. My Real Time companions and I – whom you might meet later on if I manage to convince them to appear on the DigiBlog, oh to have stubborn friends! – accompany Logosnet and e-REAL founders and managing partners Fernando Salvetti and Barbara Bertagni to many simulation conferences around the world, like SimOps and The Learning Ideas Conference. I’m not gonna lie, I do enjoy the travelling – I mean, how many avatars can brag about international appearances? – but what really seals the deal for me is the chance to be sort of an avatar ambassador, showing the world what we digital humans can do and how we can help you humans in many ways. And yes, maybe I also enjoy the attention of the public a little…

Real time emotions in a digital human

Now, as I said, I can replicate emotions just like you humans. What’s so special about that, you ask? Well, thanks to the live inputs of my operator during training sessions, I don’t simply play a predefined script; I can react to the trainees in real time. So, it’s just as if you were talking to a human, a digital human. If you tell a bad joke, I will laugh – or not, I might get offended just to make it a teachable moment. If you hurt my feelings, I will look hurt. And “look” is the key word here, because I do not really have feelings, and so you can’t hurt me. But we will come back to this.

Anyways, I boast a lot about myself, but really, human emotions are so complex I would not stand a chance without my operator. Me on my own? Oh, that would be embarrassing… The operator can manage me through a control panel and influence a variety of small details, including my emotional reactions, but also some extra stuff that I will show you later (I have to say, it’s really hard not to spoil anything!). If you’re reading this and thinking that being an operator is hard and only qualified people can do it, don’t worry. Anyone can learn if they want to; we have a manual for that (no really, it’s 10 slides, anything that fits in 10 slides can’t be that hard, right?). If you don’t believe me, here’s my operator talking about his experience working with me!

Diversity & Inclusion with customizable avatars

I have tried my best, but I can’t keep the secret anymore… I have superpowers! No, not laser eyes or stuff like that, no, it’s not time travel either, ok maybe it’s not really a superpower, but one could say I shapeshift and space travel… You don’t believe me? Well, do you remember all those nifty settings my operator can change? They control the background that is shown behind me in the simulation, but also my poses, my clothes and some physical features like my skin tone. I can go from a Hispanic office worker to an African-American doctor in a few seconds.

As I said, there is a reason we’re heavily employed in D&I: it just makes sense! But it’s not just about looks. D&I training can be a very sensitive process for both the employer and the employees, and before people learn how to properly address the issue, some of the participants might get emotionally hurt during the training. As an avatar, I have no emotions that can be hurt, and you can practice with me for as long as you need to. In fact, I’m a relentless educator and a pretty good motivator. I will not give up on you, just make sure the cable has access to power.

Still, even though it’s not just about looks, looks matter too. Can humans shapeshift and space travel? I don’t think so. And we all know that imagining scenarios takes a lot of effort that could be better invested in the actual D&I training, so instead of asking your employees to imagine sketches to practice, or hiring a dozen different actors, you can just simulate all the scenarios you need with an e-REAL interactive wall and, well… me!

Now don’t come at me with “Matt, I don’t want to offend you, but no matter how much you switch your appearance, you can’t really be inclusive”, because I know. This is not a one-man show! I like to call myself THE Real Time Avatar – sounds fancier, you know… – but I’m really part of a team, and together, I assure you, the combinations are endless. For example, sit me in a wheelchair with a hospital background and I can be an injured patient, but do it in an office setting and you are testing your recruiters for biases. Just look at these examples!

Oh no, look at the time, I have a digital flight to catch! I hope you found this insight into Real Time Avatars interesting. The DigiBlog will be waiting for you next week with a new digital human. I’m really late. Better use those space travelling powers… Bye!!